In the previous article, we explained how to use Google Search Console (GSC) and Screaming Frog (SF) to perform a technical SEO audit. One part was deliberately left aside: structured data. The great advantage of using Screaming Frog to audit your structured data is that a single crawl gathers every structured data error that Google flags. This lets you spot every invalid rich result that hurts your domain’s visibility in the SERPs and, consequently, your click-through rate.

In this article, you will discover how to identify structured data issues at scale.

Spot Structured Data Errors in Your Search Console

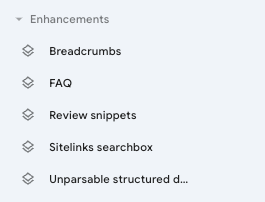

In the “Enhancements” section of Google Search Console (GSC), you will find the Structured Data section. Errors will be highlighted in red.

Validate Your Markup with Google’s Rich Results Test

In my experience, even though Schema.org was founded by Google, Microsoft, Yahoo, and Yandex, its Schema Markup Validator and Google’s Rich Results Test do not always agree. Always make sure your structured data passes Google’s Rich Results Test.

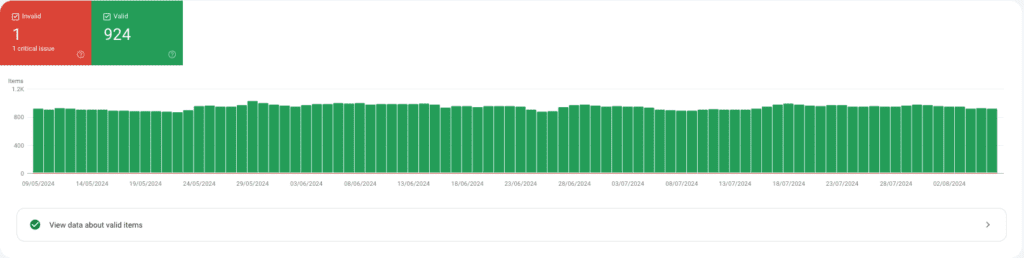

If markup that fails validation is deployed anyway, GSC reports it as invalid, as shown in the image below.
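
Before pasting markup into the Rich Results Test, a quick local check can catch the most basic failure: JSON-LD that is not even valid JSON. The sketch below is a minimal, standard-library-only example; the names `JsonLdExtractor` and `check_jsonld` are our own, hypothetical helpers. It only confirms that each `application/ld+json` block parses and reports its top-level `@type`; it does not replace Google’s validation of required properties.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        # Attribute values can be None (e.g. <script async>), hence the "or".
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        # Script content may arrive in several chunks, so buffer it.
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def check_jsonld(html):
    """Return (schema_type, problem) per JSON-LD block; problem is None if it parses."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    results = []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            results.append((None, f"invalid JSON: {exc.msg}"))
            continue
        # Top-level arrays or @graph wrappers have no single @type; report None.
        schema_type = data.get("@type") if isinstance(data, dict) else None
        results.append((schema_type, None))
    return results
```

Anything this flags as invalid JSON will fail every validator, so it is worth fixing before spending time in the Rich Results Test.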

Quickly Find All Structured Data Errors Using Screaming Frog

A quicker way to get the same data is to use Screaming Frog (SF). It lets you see every error in a single spreadsheet and identify which ones Google treats as errors.

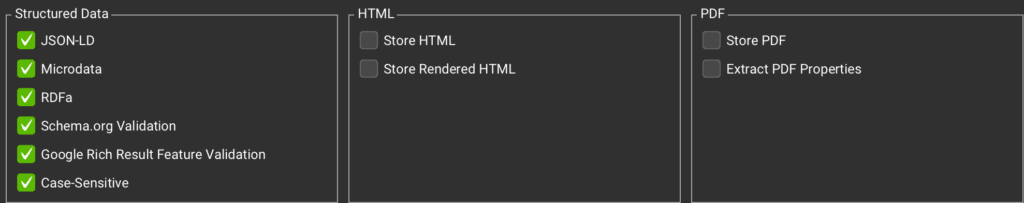

To enable this in Screaming Frog before crawling, go to “Configuration > Spider > Extraction” and enable “JSON-LD”, “Microdata”, “RDFa”, “Schema.org Validation”, and “Google Rich Result Feature Validation”.

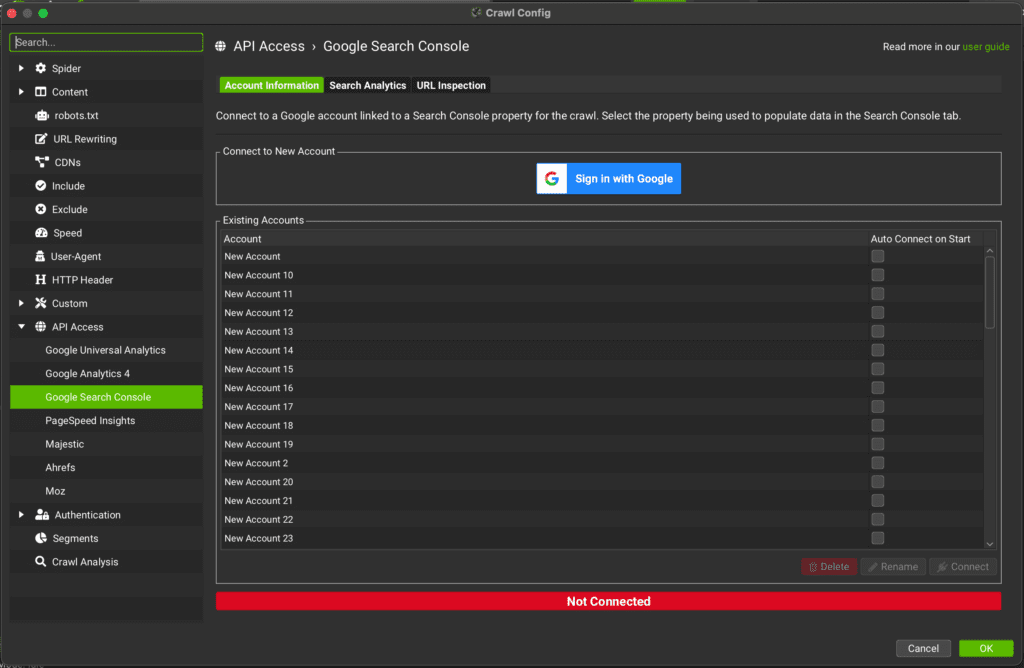

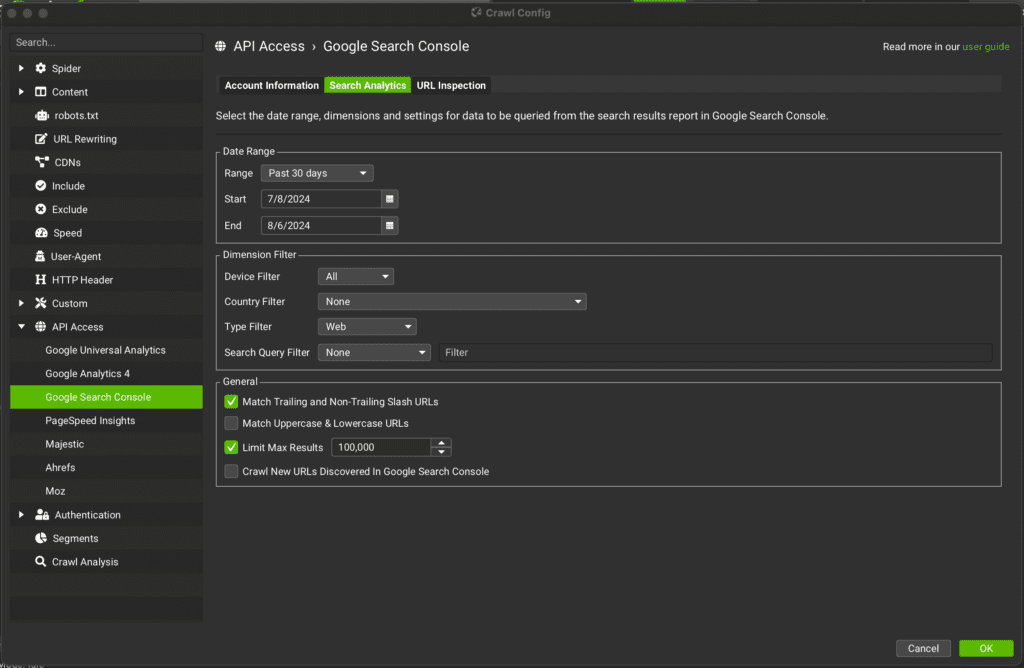

Under “API Access” > “Google Search Console”, click “Sign in with Google” and choose your property.

Select the date range, and the country if your target audience is concentrated in one country. Do not forget to connect to the URL Inspection API before starting your crawl.

Do not skip the API connection. SF reports every Schema.org problem as an error, so without Google’s data you risk drawing the wrong conclusions and will find prioritization much harder.
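
The reason the API matters is that Google’s URL Inspection response tags each rich result issue with its own severity, so Google’s real errors can be separated from warnings. The sketch below assumes the response JSON has the shape `inspectionResult.richResultsResult.detectedItems[].items[].issues[]`, with `severity` and `issueMessage` fields on each issue, based on our reading of the Search Console API reference; verify it against a real response. The helper names are hypothetical, and the code only flattens an already-fetched response, making no network calls.

```python
def summarize_rich_results(response):
    """Flatten the rich results part of a URL Inspection API response.

    Returns (rich_result_type, item_name, severity, message) tuples.
    Field names are assumed from the API reference, not from SF.
    """
    rich = response.get("inspectionResult", {}).get("richResultsResult", {})
    rows = []
    for detected in rich.get("detectedItems", []):
        result_type = detected.get("richResultType", "unknown")
        for item in detected.get("items", []):
            for issue in item.get("issues", []):
                rows.append((
                    result_type,
                    item.get("name", ""),
                    issue.get("severity", "SEVERITY_UNSPECIFIED"),
                    issue.get("issueMessage", ""),
                ))
    return rows


def google_errors_only(rows):
    """Keep only the issues Google itself marks as ERROR."""
    return [row for row in rows if row[2] == "ERROR"]
```

Filtering on `ERROR` mirrors the prioritization advice in this article: warnings describe optional properties, errors block the rich result.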

Once you are all set, just start the crawl and wait for your results.

Structured Data Overview in Screaming Frog

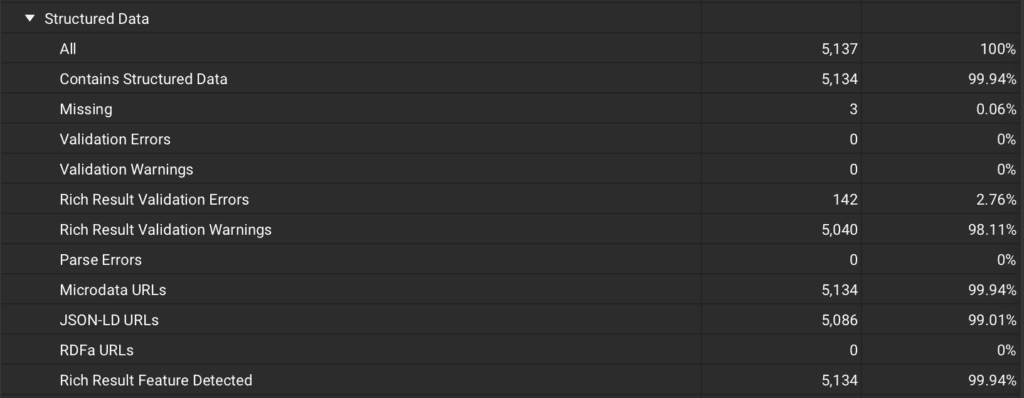

In SF’s Overview tab, scroll down to the structured data section for a quick summary of your markup.

Pay close attention to “Rich results validation errors.” In my experience, “Rich results validation warnings” can be ignored, as they point to information that could be added. Documentation often suggests extra properties, but these were probably left out because the information was unavailable or irrelevant to your business.

How to Analyze Your Exported Data

After exporting the data to a Google Sheet, focus on the “Rich Result Errors” column and sort by the highest number of errors. Before looking at the errors themselves, check the listed URLs.
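
If you prefer to rank URLs before the data reaches the Google Sheet, the same sort can be scripted on the CSV export. This is a minimal sketch assuming the export has an “Address” column for the URL and a “Rich Result Errors” column holding an integer count; `rank_by_rich_result_errors` is a hypothetical helper, and you should adjust the column names if your export differs.

```python
import csv
import io


def rank_by_rich_result_errors(csv_text, url_column="Address",
                               error_column="Rich Result Errors"):
    """Return (url, error_count) for rows with errors, highest count first."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = []
    for row in reader:
        try:
            count = int(row.get(error_column) or 0)
        except ValueError:
            count = 0  # non-numeric cell: treat as no errors
        if count > 0:
            rows.append((row[url_column], count))
    # sorted() is stable, so URLs with equal counts keep their export order.
    return sorted(rows, key=lambda r: r[1], reverse=True)
```

For a file on disk, read it with `open(path, newline="", encoding="utf-8-sig")` (the `-sig` variant drops a byte-order mark if one is present) and pass `f.read()` to the function.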

Do they use the same template? If so, ask your developers to fix the issue at the template level. This solves the current issue and also prevents future errors. Use the Google Sheet to list the affected URLs and describe the issue.
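
Whether errors share a template can be estimated roughly by grouping URLs on their first path segment, such as `/product/` or `/blog/`. This is a heuristic assumption, not something SF reports: sites whose URL structure does not mirror their templates will need a different grouping key. The hypothetical helpers below take (URL, error count) pairs and list the largest groups first, which gives a starting order for the developer list.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def template_key(url):
    """Approximate a URL's template by its first path segment (a heuristic)."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return "/" + segments[0] + "/" if segments else "/"


def group_by_template(urls_with_errors):
    """Group (url, error_count) pairs by template, most affected URLs first."""
    groups = defaultdict(list)
    for url, count in urls_with_errors:
        groups[template_key(url)].append((url, count))
    return sorted(groups.items(), key=lambda kv: len(kv[1]), reverse=True)
```

A group containing many URLs with the same error count is a strong hint that a single template fix will clear all of them.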

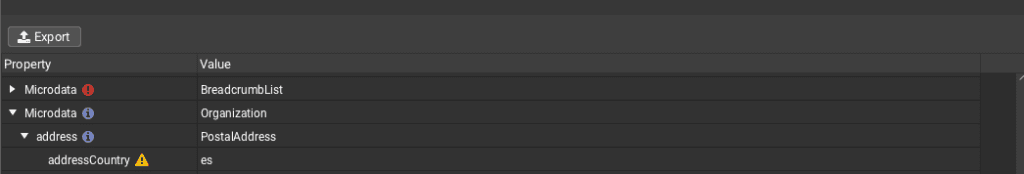

When looking at the Google Sheet, do not be alarmed by a long list of URLs. To find out which structured data type contains the error, select a URL from your list of rich result validation errors in SF and check the “Structured Data Details” tab.

From the Google Sheet, create an action plan to fix the structured data errors. Prioritize fixes that can be made at the technical (template) level and applied in bulk.

Final Thoughts

As we have seen, you can quickly gain insights into the issues and opportunities in your domain’s structured data. Save the crawl configuration you just created, or reopen your previous crawl and run it again, to monitor your structured data continuously. Regular audits and updates are essential for keeping your structured data healthy, which ultimately improves your site’s visibility and click-through rate in search results.
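
To make repeated crawls useful for monitoring, you can compare rich result error counts between two runs. The sketch assumes each crawl has been reduced to a `{url: rich_result_error_count}` mapping, for example from the export; `compare_crawls` is a hypothetical helper, not an SF feature. URLs missing from the newer crawl are deliberately not counted as fixed, since they may simply not have been crawled.

```python
def compare_crawls(previous, current):
    """Compare {url: rich_result_error_count} maps from two crawls.

    Returns (new_or_worse, fixed):
      new_or_worse: URLs whose error count rose (including newly seen URLs)
      fixed: URLs that had errors before and were crawled again with none
    """
    new_or_worse = {
        url: count
        for url, count in current.items()
        if count > previous.get(url, 0)
    }
    fixed = sorted(
        url for url, count in previous.items()
        if count > 0 and url in current and current[url] == 0
    )
    return new_or_worse, fixed
```

Reviewing `new_or_worse` after each release catches template regressions early, before they show up as invalid items in GSC.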